Watch the Usable AI™ webinar

Our experts recently discussed the power and potential of prioritizing people, not just tech, in our webinar on Usable AI™.

Usable AI™ is DK&A's proven design framework that puts customers first when designing and delivering AI-driven products.

Watch the webinar recording:

No time to watch? Get the highlights here:

The AI paradigm shift

This is the iPhone moment of AI.

Our Head of Usable AI Aki Ranin opened the webinar on the topic of the AI paradigm shift.

What does it mean?

Remember 2007 when Apple introduced the iPhone? It changed the way we learn, shop, and communicate.

Putting a supercomputer connected to the internet into everyone’s pocket required us to rethink everything.

It’s about to happen again.

This time with AI.

Investments in generative AI are investments in growth

McKinsey highlighted the evolving landscape of AI investments in their recent survey.

Businesses have been investing in AI to cut costs and enhance efficiency.

What are high performers in AI doing differently?

They look beyond cost-saving measures: they're exploring new business, revenue sources, and innovative AI-driven products.

They have shifted the focus towards Generative AI, and its potential to drive business growth.

AI-driven products

“Uber is a taxi plus a smartphone. But what is a taxi plus AI? What is a hotel plus AI? What is anything plus AI?”

— Aki Ranin, Head of Usable AI, DK&A

Established internet companies like Netflix and Uber are built on previous technology paradigms. What happens when you combine existing industries with AI? How can AI-driven products transform these sectors?

That’s the essence of AI-driven products.

Making AI usable

We at DK&A want to make using AI practical and user-friendly. Usable.

We want to use AI tools pragmatically when we design digital products.

How do we get the AI to behave as we want it to behave? To really fulfill some task or job that we've assigned to it?

That’s the art of AI-driven user experience.

Currently, there’s a gap between human-centered design, AI prompts, and API-first AI.

Generative AI requires a new approach to designing user experiences.

The new technology has the possibility of building out great conversational UIs. It also opens up new ways of interacting with data and systems.

Our Usable AI™ framework puts people first, not just tech. We design and deliver AI-enabled products that drive value with top-notch customer experience.

“We believe that customer experience drives customer value, and customer value drives company value. Both customer experience and user experience should be on the top of your list when you're thinking about AI-enabled products.”

—Florian Plank, Chief Strategy Officer, DK&A

Our Chief Strategy Officer Florian Plank talked about designing AI-driven products.

We at DK&A take the same approach whether we design AI-driven products or classic, traditional products. We prioritize the customer experience.

Usable AI™ framework

Our Usable AI™ framework is a stepped, linear process. It leads us all the way from product discovery to definition, and from definition to delivering something that we can test, and eventually also use in production.

The framework consists of several elements, such as:

Exploration sprint: We identify opportunities for AI in your business and evaluate their potential impact.

Validation sprint: We focus on specific use cases and validate their feasibility with AI.

AI personas: When we think about the AI persona, we think about what people need. An AI persona could be, for example, a hotel concierge rather than a booking app that uses AI, or a nurse in health insurance services rather than an insurance agent. The UX equivalent of an AI persona is a customer persona.

Conversation flows: We define how your users interact with AI-driven products through conversation. The UX equivalent of a conversation flow is a use case.

Prompt design: We translate all the previous work into natural language prompts or code for AI interactions. The UX equivalent of prompt design is wireframes.

Action maps: We break down actions and dependencies so that we can implement AI solutions effectively. The UX equivalent of action maps is information architecture.

Our Usable AI™ framework will be open-source later this year.

You asked, we answered

We wrapped up the webinar with Q&A. Here are the short answers to audience questions about Usable AI.

What does the AI transformation timeline look like compared to mobile transformation?

Experts predict that AI transformation will happen much faster than mobile transformation. Unlike the decade-long mobile transition, AI adoption is expected to take 3-5 years.

What kind of roles are involved in Usable AI projects?

Traditional design roles such as UX designers, content designers, and developers remain indispensable in the Usable AI design paradigm. While some upskilling may be necessary, these roles are evolving rather than undergoing a complete transformation.

It’s an evolution, not a revolution.

We need both AI designers and engineers. AI solutions demand a mix of design skills and technical engineering expertise. Cross-functional teams with both designers and engineers are required.

When to choose an exploration sprint? What about the validation sprint?

The exploration sprint is the choice for discovering opportunities.

The validation sprint is suited for prototyping.

Exploration sprints systematically map the opportunity space while validation sprints test defined use cases. Together they identify possibilities and validate solutions.

AI might be wrong. How to deal with the risk of errors?

Our Usable AI framework provides structure to limit errors. A structured process reduces the risks of incorrect behavior by the AI. Creative risks and accuracy need to be balanced. When AI is given higher creativity the chances of incorrect or nonsensical outputs might increase.

How to express brand value with AI assistants?

Carefully crafted language adds brand identity and value.

Brand tone of voice makes LLM conversations reflect company values. Define what's important to you and what you focus on, and then, of course, also what to step away from because it falls outside your brand value definition. Then instruct your AI assistant.

How will we at DK&A use Usable AI in our own processes?

We will be applying Usable AI just as we are doing it for our customers. We are applying the tools of design thinking to our own organization, and we are thinking about our organization in the same terms. We also have a tool called the Usable AI workshop, which is designed to be used with our teams internally.

Thanks to our webinar host and business designer Mona Moilanen, to Aki and Florian, to our partners, and of course to everyone in the audience for making the webinar a success!

Transcript

This transcript has been automatically generated.

Intro

Mona Moilanen: Good morning from Helsinki and good afternoon to our guests from Singapore. Welcome to the DK&A webinar on Usable AI. We're absolutely thrilled to have you join us today for what promises to be an insightful exploration into the world of generative AI and user experience.

My name is Mona. I'm a business designer at DK&A, and today I have the pleasure of hosting all of you.

Artificial intelligence is on everybody's mind, and while it's certainly the source of great fear and great excitement all at once, many companies are trying to start and identify real use cases in their industry for what has become known as generative AI.

And as it seems, that's a lot harder than you would initially think.

We have spent the last 45 minutes at DK&A defining an approach to designing AI-driven products today without sacrificing what really matters, which is customer value.

Today we're here to tell you all about it.

For the next 45 minutes, we will be diving right into the evolving landscape of generative AI and also look at the next generation of design tools that we can use to deliver real value instead of technology-first.

I have here with me in the studio our head of Usable AI, Aki Ranin, who will be opening the webinar on the topic of the AI paradigm shift.

Aki, would you say a few words about yourself to our audience?

Aki Ranin: Sure. Thanks, Mona. Hello, everyone.

So I suppose I find myself in 2023 now rebranding myself as a 20-year veteran in AI.

I started in AI really in the computer vision days, early 2000s in university. But at the time, I think the technology wasn't really ready for the big stage.

I moved to do other things in IT as a consultant, manager, etc. And when I started my startup career as a founder, that's when the machine learning paradigm really kicked off.

So this would be sort of 2015-2016.

And I started spending more and more focus on it.

And over the years now, I find myself again as a consultant, author, lecturer on these topics. Increasingly, I would say over the years, hands-on as well.

But like everybody else probably in the field, the last 12 months now relearning everything from the context of generative AI.

Mona: Wonderful. Thank you so much, Aki.

And then we have on the online space, joining our Chief Strategy Officer Florian Plank, who will be then talking more about designing AI-driven products.

Hi, Florian. Can you hear us?

Florian Plank: Yeah, I'm really excited to be with you, even if it's only remotely.

I've spent the last 20 years building digital products and the last few years trying to bring an AI approach to user experience.

And I just got a huge help from our friends at OpenAI and co. earlier, or rather later, last year.

And now I have a chance to talk about a really exciting new approach.

Mona: Great. Looking forward to that. Later on, we will also wrap up this webinar with a Q&A session. And I definitely want to encourage you all to participate. Be active. Ask questions from our speakers.

There is a message box on the broadcast site where you can ask questions and also leave us a little contact request if you would like to continue the discussions later on. But time is of the essence. So let's get started.

Should we jump right in, Aki?

Go ahead.

Aki Ranin: The AI paradigm shift

Aki: Let's do it.

So we're going to start by talking about this AI paradigm shift and some useful analogies, some ways of thinking, some ways of structuring, approaching this problem and what it sort of means for maybe the next few years ahead for, let's say, businesses globally of varying sizes and shapes.

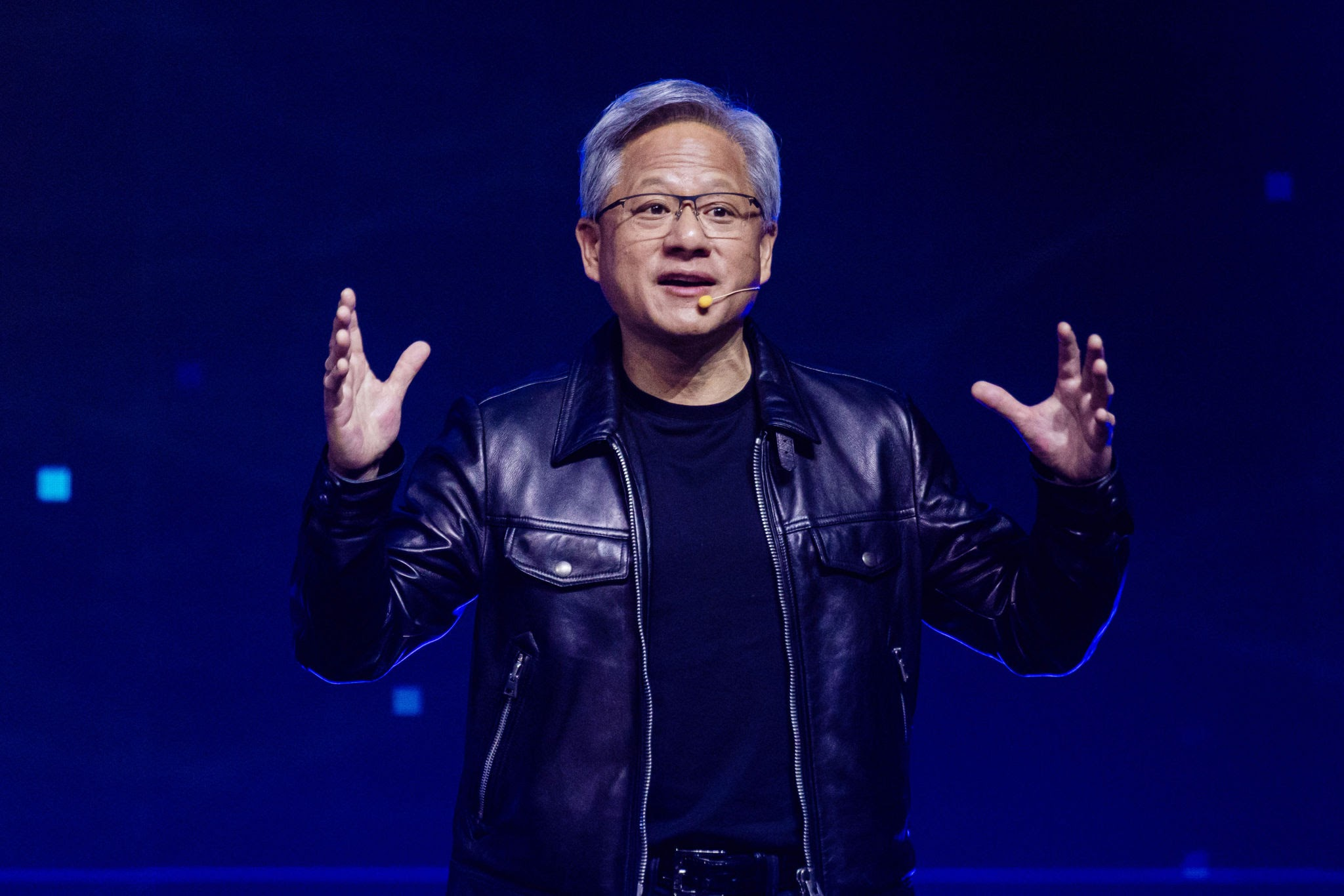

The analogy we started from, which I found to be very robust over the last few months working on this topic, is the iPhone and mobile transformation. So recently, Jensen Huang, the CEO of NVIDIA, said that this is really the iPhone moment of AI.

So what does that mean?

What is the iPhone moment?

Well, if we play back now to, say, 2007, 2010, we all working in this industry probably have some recollections of what that meant.

We went from the feature phone and web, laptop, desktop paradigm into a world where we had to shrink our websites into this little handheld device.

And everybody was thinking: “Hey, I can play my music, maybe some nice games."

You had the new sort of touch-based interface.

But what does this mean for my business?

So then fast forward maybe five years, you have the iPad, eventually you have the Apple Watch, et cetera, sort of all these different smart devices.

And we started to talk about mobile-first design, mobile transformation, and suddenly there's an app for everything and every business needs an app themselves.

So if something similar is happening, of course, now is the time to define what does AI transformation look like?

What is AI-first design?

And this is really the task that we set ourselves on.

In reality, we're starting from this, which is an empty text box. We've all played with ChatGPT.

I'm sure all of you have as well, with differing levels of, let's say, success in terms of finding ways to actually apply it in your daily life, whether it's personal or business.

But the trick is that you sort of don't know what you can do with it. It sort of seems like it can do a lot of things.

It's very human-like in certain ways and in other ways, not quite like you would engage with a human.

We know from, say, Twitter threads and Reddit that it's potentially even superhuman in some areas.

It can do really great results in various exams for biology, law school, et cetera, maybe not so great at math. And we're sort of uncovering these abilities.

But at the end of the day, do you copy-paste your business information into the text box? Can you upload your documents? How do you bring it into your work processes?

That's really what we're trying to all figure out.

If we look at what the, let's say, leading businesses, large businesses globally have been doing over the past few months, this was a survey run by McKinsey before the summer break.

And they tried to sort of split the cohort into, let's say, businesses looking at AI and then these so-called AI high performers.

What I find interesting is in the machine learning paradigm, the main focus areas for business were traditionally like optimization.

So you're trying to save costs, whether it's chatbots or other types of algorithms.

You're developing your own models, trying to make some process slightly faster, slightly easier, slightly cheaper, whether it's customer-facing or internal.

And then there's this broad category of things like insights and analytics.

But if we look at what the high performers are doing, it sort of tells you something about what's new with generative AI in that their focus is actually on new business, new revenue sources, new products.

And that's something that you really didn't see as much in the machine learning paradigm.

And if you look at the Internet companies of today, the FAANG stocks, you've got your Netflixes, Ubers, Googles, Airbnbs, TikToks, etc.

These are sort of the household names of the Internet. And these are all based on the previous paradigms.

You might say the cloud paradigm and the mobile paradigm.

So if you think, OK, what is Uber? Uber is a smartphone plus taxi. Easy to say now, like, oh, why didn't I think of that?

But at the time it was not clear at all on what was possible and what you could do with these kinds of new paradigms.

And now we're asking the question, well, what is taxi plus AI? What is a hotel plus AI? What is anything plus AI?

And that's kind of this AI-driven product approach that we think is going to really transform not only the Internet in maybe the next five years, but also potentially disrupt a lot of businesses, potentially, including yours.

And this is the real task that we're focusing on specifically in generative AI: what does an AI-driven product look like?

So we've come up with something that we think is helpful, we're branding it as Usable AI. And really, the point of this is to say, let's not just do this.

So if you look at the screen here, you can see this is a snapshot from Midjourney, which is one of the more convoluted user experiences currently available in generative AI.

You have to log into there and register into their Discord server, go into some channel, post a message, and then suddenly you start seeing cool images.

So that's like a very weird experience that's not really applicable to your business.

So we need something in between where we can start from our traditional human centered design processes and principles, but actually apply them to these prompts.

So there's quite a big gap.

And this is what we're trying to address.

Now, why do we think LLMs are so important?

I think there's one sort of misconception.

You've seen a lot of media articles, maybe over the summer talking about this idea of a stochastic parrot, which is just this broad idea that, hey, these LLMs just kind of repeat what they found on Wikipedia.

So they're kind of like a database lookup of various sources like Twitter, Reddit, and Wikipedia. That's false.

Actually, what's inside these large language models are very high dimensional relationships between words, concepts.

In this case, they're called embeddings between different tokens.

But this little image here on the right kind of tells you the story that inside these neural networks, just like inside human brains, you've got various concepts like man, woman, king, queen.

But the sort of secret sauce here is that unlike human brains, the dimensionality, the relationships between these concepts can be extremely high dimensional, not three dimensional.

Like in this toy example, it could be one thousand dimensional, one million dimensional.

So this is like a very simple thing for an LLM to do, which is to say, OK, what's the female version of a king?

It's not just remembering that it's a queen, it can fundamentally understand that the relationship is similar between a man, a woman, and a king and a queen.

And again, as said, this can be applied in very high dimensional spaces.

And this is why it can have these sorts of superhuman abilities to perform amazingly well on tasks, which can be very intellectually complicated for humans and then seemingly kind of fall short on others.
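The king and queen analogy can be made concrete with a minimal sketch. It assumes hand-made three-dimensional vectors rather than embeddings from a real model, and the names `EMBEDDINGS`, `cosine`, and `analogy` are illustrative only. The idea it shows is that "king minus man plus woman" lands closest to "queen" when relationships between concepts are stored as directions in a vector space.

```python
import math

# Toy 3-dimensional "embeddings". The numbers are invented for illustration;
# the dimensions loosely stand for (royalty, maleness, femaleness).
EMBEDDINGS = {
    "man":   [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "king":  [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "apple": [0.1, 0.1, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def analogy(a, b, c):
    """Answer 'a is to b as c is to ?' by finding the word nearest to b - a + c."""
    target = [
        vb - va + vc
        for va, vb, vc in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])
    ]
    # Exclude the three input words so the answer is a different concept.
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


print(analogy("man", "king", "woman"))
```

Real models learn such vectors from data and use far more dimensions, so the arithmetic is rarely this clean, but the principle is the same.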

We've been talking exclusively about ChatGPT here, and most of the online conversation and dialogue is very focused on OpenAI and Microsoft and their sort of relationship and ecosystem that they've built.

But it's important to note that this is not the only game in town.

Say over the last few months, there's a few interesting ones.

I find myself using Claude, which is currently only available in the UK and US, but it performs much better in terms of uploading your documents, whether it's spreadsheets or visual files like PDF slides, Word documents.

And you can ask questions like summarization from those sources. Very useful.

Then there's Llama, which is from Meta (Facebook).

And the way I like to think of this is if, let's say, this Microsoft, ChatGPT, OpenAI ecosystem, closed ecosystem behind the API, this is kind of your Apple and iPhone, then Llama from Facebook is more like your Android.

So it's an open-source large language model, which you can literally sign up and download.

Anybody can do it. There's no limitations per se.

And this means that it's now your job to manage these models, deploy these models, and take care of the privacy.

But it's a completely different approach to what you've seen and really worth taking seriously.

The last thing worth mentioning is Google. They are coming out with something called Gemini from their DeepMind team, which is suspected to be the first truly multimodal platform, which means it can do equally well images and text in and out. So that could be something to expect before Christmas.

So why are we talking about this?

DK&A is traditionally known as a design agency, really developing the best digital products in the world, using the traditional UX methodology and frameworks.

Now, what we see on Twitter in terms of what's happening with GPT is a lot of, let's say, so-called AI engineering.

So it's engineers who hack together things based on the API, which they have access to from OpenAI, for example.

Now, this is great. This is innovation.

But it's also not how we're used to doing customer and product-centric design.

We also are not really making active use right now of the traditional machine learning field because these people work on models.

And if you're using ChatGPT, you don't really have access to the model.

Everything's behind the API.

That's, of course, different if you're using open source models.

But by far, currently, most of the focus is on AI engineering on top of the API. And what we're saying is, if you have AI engineers, that's great. But we also need AI designers as the counterpart.

And that's how we bring back the focus to the customer and the product. So where did we start?

We started from really the science of conversation. If you play back the history of AI, we had chatbots before. They were not really good.

This goes probably all the way down to like the mid-90s. There were attempts by IBM to establish something called conversational UX.

But it was quite tricky because you had to really hard-code the human-like speech or the human-like interactions.

Now, if we go even further back, there's something called conversational analysis, which started from the '60s in terms of analyzing, interpreting, structuring conversations for things like telephone service, customer service that we used to use before chatbots.

Now, the beauty of large language models is that actually the one skill that they really possess is this human-like interaction.

So on the screen, you see these little brown dots called sequence pairs. That's your hello, your greetings, your question and answering kind of structures. We get that out of the box.

The challenge is, how do we structure the conversation so that we actually get the AI to behave as we want it, to fulfill some task, some job that we've assigned to it? And this is now the new art of AI-driven UX, or in our sense, what we're talking about is Usable AI.

And now I'm going to switch over to Florian so he can tell you all about how that works.

Mona: Wonderful. Thank you so much, Aki.

This was very, very insightful and exciting for sure.

And before we jump over to Florian, I want to remind all of you, if you have questions to Aki, then please write them down to the chat box on the broadcast site.

And then later on, we will look at them and answer some of the questions.

But now, for sure, Florian, let's look at how to design AI-driven products.

Florian Plank: Designing AI-driven products

Florian: Let's do that.

At DK&A, we take the same approach to designing AI-driven products that we have taken to building classic or traditional products.

We have one core belief that carries through everything we do. We believe that customer experience drives customer value, and we believe that customer value drives company value.

It's the best and direct line of doing what's right for your business and for your customers in one go.

If you believe that that's true in general, then it's going to hold true here as well.

And then customer experience and user experience both should be very much at the top of your list when you're thinking about AI-enabled products.

However, as Aki has already pointed out, generative AI requires, in many cases, a new approach to exactly doing that, to designing these experiences.

On one hand, this technology now lends itself maybe for the first time ever really to building out great conversational UIs.

This hasn't really been the case so far. I'm sure many of you have made experiences with this. And then it also opens up new possibilities for new ways of interacting with data and systems that you already have.

So with our Usable AI framework, we're trying to do both. We're trying to tackle both challenges.

All right, so where do we begin?

The approach that we take with Usable AI on a really high level is a stepped, linear process. It leads us all the way from product discovery to definition to delivering something that we can test or eventually also use in production.

The way you get started kind of depends a little bit on your organization's maturity level.

AI didn't just fall out of the sky. We have been working with machine learning for a long time. So depending on where you are, you might want to start a little bit later with what we call the AI validation sprint.

If you're really new to this and if you're just kind of trying to get the lay of the land, we're proposing to start one step earlier with a tool that we call the Usable AI exploration sprint.

This is not something I'm going to spend too much time talking about today.

In short, it's a process that brings structure and rigor to discovering opportunities in terms of AI for your business, for your team, and then evaluate them in regards to their business value and their potential impact.

So if you're looking to answer: “What should I be doing? Why should I be doing this?”, then this is a good starting point. It's really heavily research-based.

It's analytical and clear in the approach, and it helps you map out the opportunity space and then pick something to focus on. So ideally, when you start with the second phase, the AI validation sprint, which is something we're going to be talking about today, you would already have done that, and you would have already mapped that space. As we don't have that luxury here in this setting today, I'm going to give you a rough overview of what others are doing and how we see the space in general.

So let's take a look at some of the use cases. At the moment, we're grouping use cases into three main groups. I would be very surprised if these groups remain stable over time. I think that there's a lot of merging and shifting going on already, and we're seeing this happening already, but that's what we're seeing at the moment. It's also worth noting that we're talking a lot about gen AI, generative AI, but we're also heavily focusing on the LLMs. This is what we're going to be doing here as well. I'm sure there will be great use cases, and already there are some great use cases for some of the diffusion models and other machine learning-enabled technologies, but for now, I think we're going to focus on the LLMs primarily.

All right, what's there? So we've got assistants. That's the thing that got us excited about AI initially and the LLMs.

It's essentially what you get with ChatGPT or Inflection's Pi, which I can highly recommend if you haven't tried that. It's a consistent, contextual experience that happens in a chat window. It's a way for you to communicate with your customers if you were to implement one of those in the tone of your business and your brand. It's a way for you to bring your business processes through a conversational experience to your customers.

More interesting, I think it already gets at the copilots. The copilots often have custom UIs, or they're integrated with other software products that you may already use. I think you very likely have heard of what Microsoft is doing there, both with the Office 365 suite and their copilot there, or then with GitHub's copilot as well. So those are custom integrations, and they often maybe add a conversational element as an afterthought. They focus on getting you to do what you're doing faster, better, more efficiently, automate away the low-value tasks that you have to do, and focus on great stuff like summarization, search, automation, analysis, things like this that help you to get faster and better at what you're already doing. And then finally, there's the experts.

That's often, again, a conversational experience that has access to your data. So there you see already overlap with the assistants. That's an experience like you can find it with Claude 2, which Aki already mentioned, and then now since yesterday also with Google Bard that has access to your documents, your data lakes, your data sets that you use in the company, and then is able to enable you to interrogate these data sets and find what you're looking for in a way that you need it at the moment. Again, here, I think we're also going to be seeing a lot more custom UI.

All right, with that out of the way, let's take a look at the AI validation sprint. So unsurprisingly, if you have read our invite, this follows traditional design thinking methodology on a really high level, at least. So we're looking to take our favorite tools, empathy, ideation, experimentation, validation, all of these things into the space to build truly user-centered solutions. That's what we're here for. And we do this following the logical steps of exploration, scoping, building, testing, iteration, and so forth. Under the hood there, then you're going to find a few components which you likely haven't seen before. They're here marked in red.

These are some of the tools that we have developed now over the last, well, about the first half of this year. And those will be the tools that we are now using to create AI-enabled products and services for our customers. These tools come in various shapes and sizes. They are workshop exercises, canvases, guidelines, and so forth. We are planning on making a lot of this open source by the end of the year. So then you can try it out with your teams as well. If you, in the meantime, want to talk about anything in more depth, you know where to find us. We won't have too much time to go into detail on every one of those.

I'll give you a quick overview of what's there, what's in the box. So let's start with the backbone first. Over the course of a Usable AI project, we aim to work our way from what we call an AI persona, probably haven't heard that before.

We're going to walk you through this in a second, what this means, all the way through a number of steps to the way we communicate with the LLMs, which is prompts, as you're well familiar.

Along the way, we're going to try and discover what skills and abilities this AI persona has to have. We also try to understand what the intentions and goals of our customers are.

We try to understand what outputs we need to generate in order to satisfy these goals.

And then finally, how to actually implement it in terms of the underlying technology.

So what's an AI persona? If you think about AI at the moment, it's very easy to get stuck at an image that looks a lot like ChatGPT. However, I think it would be very surprising if a few years from now, or maybe even months from now, this would still be the case. These LLMs lend themselves really well to natural conversation, but they enable a lot more stuff, and that often will be tucked away under specialized interfaces. That's at least what we believe and see now. And this is the reason why we have come up with this concept of an AI persona.

It's a hack, it's a secret weapon that allows us to maintain a customer-centric viewpoint, regardless of how the experience actually will shape up to look like, whether there's a chat or not. This AI persona is, and this is how we do this, defined in terms of a customer need and not an existing product or an existing company. So if an insurance company, for example, wants to get a customer to, for example, get permission to see a specialist or a special doctor, then we are taking a customer-centric viewpoint and define a nurse persona rather than an insurance agent's persona, because the customer's actual need is not to talk to the insurance agency, they just want to get better.

I think we're going to walk through a little bit now through an example and try to understand a little bit what's underneath the hood, but this already gives you a sense of how we're thinking about this.

A kind of a hack or a trick you can use to kind of push your brain into this mode of thinking about an AI persona is to think about the sort of larger constructs that your customers interact with when they're dealing with your product and service. You could think about, for example, I'm dealing in home security, what would home security as an AI look like? Or what would my bank account look like as an AI, or even money?

Okay, now let's take a look at what's under the hood, and we're going to take a quick peek at some of these components that I've mentioned earlier. Let's imagine a customer wants to book a hotel room. Then the AI persona may not be designed necessarily as a travel agent, but rather a concierge, because the underlying customer need is to spend a nice time in a nice location, right? So with the solution framed this way, we can now start to define what we call a conversation flow. Throughout this conversation flow, we're going to ask a number of questions and generate a number of outputs. These outputs may depend on each other, like they may have to come in a certain order because we may have to ask one thing first and then reference it later.

They may also depend on external information. So we're going to specify one or multiple conversation flows, and these will then allow us to make up our whole experience.
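To make the idea of outputs that depend on each other a bit more concrete, here is a minimal sketch in Python. It is not the tooling used in the Usable AI process. The step names and the `EXTERNAL` set are made up for the hotel example, and the ordering simply uses the standard library's `graphlib.TopologicalSorter`.

```python
from graphlib import TopologicalSorter

# Hypothetical steps for a hotel-concierge flow. Each step maps to the
# steps whose answers it needs first.
FLOW = {
    "ask_destination": set(),
    "ask_dates": set(),
    "fetch_availability": {"ask_destination", "ask_dates"},
    "suggest_rooms": {"fetch_availability"},
    "confirm_booking": {"suggest_rooms"},
}

# Hypothetical: steps that rely on information from outside the conversation.
EXTERNAL = {"fetch_availability"}


def ordered_steps(flow):
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(flow).static_order())


if __name__ == "__main__":
    for step in ordered_steps(FLOW):
        marker = " (needs external data)" if step in EXTERNAL else ""
        print(step + marker)
```

The two opening questions can come in either order, but the availability lookup always comes after both of them, which is the "ask one thing first and then reference it later" constraint described above.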

Now, you may be asking yourself, “So conversation flow products don't actually have to be chats?”

And that is absolutely correct.

We believe that conversation flows, because LLMs use natural language under the hood anyway, are the right way to interact with this technology. So we always have to define a conversation flow, no matter whether there is an actual chat window in the experience or not, because we will have to interact with the LLM this way.

Once we have done that, we are going to move on and try and take the perspective of the technology under the hood, and we're going to define something we call action maps.

An action map basically allows us to break down what we have now defined in terms of outputs and then group it or cluster it in ways that make sense for the implementation. Some of these actions may actually appear in the final experience as conversation, others may be an email, others may be a notification, things like this.

Some of these actions, as we already mentioned, may depend on external information. Some of these actions may make use of tools, they may make API requests, and so forth. With the actions defined this way, we can now start to get a sense of how we will need to translate the customer need into something the LLM can actually help us with and work through.
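As a rough illustration of what an action map could look like once written down, here is a small Python sketch. The `Action` fields, the channel names, and the tool names are all assumptions made for this example, not an actual DK&A format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    channel: str                # where the output surfaces: "chat", "email", ...
    tool: Optional[str] = None  # hypothetical external tool or API, if any


# Hypothetical actions for a hotel-booking experience.
ACTIONS = [
    Action("greet_guest", "chat"),
    Action("check_availability", "chat", tool="availability_api"),
    Action("send_confirmation", "email", tool="mail_service"),
    Action("remind_check_in", "notification"),
]


def action_map(actions):
    """Group action names by the channel they appear in."""
    grouped = defaultdict(list)
    for action in actions:
        grouped[action.channel].append(action.name)
    return dict(grouped)


def actions_needing_tools(actions):
    """List the actions that call out to an external tool or API."""
    return [a.name for a in actions if a.tool is not None]
```

Grouping by channel shows which actions end up as conversation and which become an email or a notification. The second helper picks out the actions that will need an integration.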

And then there is one more piece that I'm going to talk about a little bit today.

There's a bunch more, but I want to get a chance to focus on this for a moment.

And that is something that you have already experienced yourself.

That's what we call prompt design. You've probably heard of the term prompt engineering. We're taking a slightly different angle here. We also believe, and this is actually based on experience, that designers are very well suited to do this, especially content designers.

During the prompt engineering phase, we take everything we've defined up to this point, the AI persona, the conversation flows, the actions, the dependencies, state changes throughout the systems, which is not something we've touched on in depth, and we translate it into code, or quote unquote code, that we use to interact with the LLMs.

And that may mean actually writing code, or it might mean writing natural language prompts or prompt templates. Actual code also means that at this point, for the first time in the process, engineers are actively joining the team.
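For readers who haven't seen one, a prompt template can be as simple as a piece of text with named slots for the persona, the customer need, and the current step. The sketch below uses Python's standard `string.Template`. The wording of the template and the field names are placeholders invented for illustration, not prompts from a real project.

```python
from string import Template

# Hypothetical template; the wording and field names are placeholders.
PROMPT_TEMPLATE = Template(
    "You are $persona.\n"
    "The customer's underlying need: $need.\n"
    "Current step in the conversation flow: $step.\n"
    "Known so far: $context.\n"
    "Reply in a $tone tone."
)


def build_prompt(persona, need, step, context, tone="warm, concise"):
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return PROMPT_TEMPLATE.substitute(
        persona=persona, need=need, step=step, context=context, tone=tone
    )


if __name__ == "__main__":
    print(build_prompt(
        persona="a hotel concierge",
        need="a relaxing stay in a nice location",
        step="ask_dates",
        context="destination is Lisbon",
    ))
```

Keeping the persona and tone as slots means a content designer can adjust the wording without touching the code that fills it in.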

So this is the moment when our AI or Usable AI team starts to collaborate more closely, because now we need to take what we have designed and put it into an actual experience that we can test and validate. With that, I'm going to give you a really quick overview of what this actually might look like in reality.

You may have come across diagrams like this before, variations thereof. If we think of AI-enabled products away from the fuzzy design language that I've used up to this point, then this is roughly what it looks like. You're going to be getting a lot of offers and a lot of great tools from some of the big companies like OpenAI, Microsoft, Google, and so forth. And that is great. These are the kind of things that we integrate with in the backend.

This is not what we focus our work on in a Usable AI project. If you want to use an open-source LLM, that's just as fine a choice. It really depends on the use case. Our work in the Usable AI context happens in this golden backend middle, where all the orchestration brings together the various moving parts of this kind of product.

So we're, of course, spending a lot of time building out the actual client UI. Those are the kind of things that your customers will interact with, whether this is a chat experience or some mixed model experience or something different altogether. We're going to bring together the external tools, external APIs. We're going to bring in the data and the existing documents that we may want to enable the LLM to search through and access. And then, of course, there's a lot of orchestration happening sort of tucked away behind the curtain, closer to the LLM that is much more technical in nature and that the AI engineers are prepared to handle.

I hope this gives you a rough idea of what the usable AI process looks like. I said there is a ton of components here that we could talk about. We spent a number of months now working through this and coming up with a process that I'm really excited about. If you want to talk more about this, ask a question now or get in touch with us later.

I'll be more than happy to jump on a call. Thank you.

Q&A

Mona: Great. Thank you so much, Florian.

Sounded really interesting and thank you for shining some light on how to marry the AI value proposition with actually how to design solutions using it. Now we have some time for our Q&A session and please get your typing fingers ready if you haven't already.

The questions will be moderated by our wonderful Hanna behind the screens and if you don't see or hear your question answered right here right now, then don't be scared. We will definitely use those as input for blog posts and other material later on so you can stay on the lookout for materials from us.

Additionally, you will also receive a recording of this webinar so you can at any time revisit these discussions. But I'm looking at you, Hanna. Do we have questions from the audience?

The first question, I will address this actually to you, Aki.

“Do you have any thoughts about the timeline of this transformation in comparison to mobile transformation?”

Aki: Yeah, so like I was saying earlier, the mobile transformation, in my experience doing that kind of work as a consultant, mainly in Southeast Asia but to some degree also in the US and other regions, certainly happened faster in some industries than in others. But I think the time frame you're talking about is roughly 2010 to 2020. Of course, it hasn't reached every nook and cranny. There are still places where we're using paper and desktop and all of that, especially in things like the financial system. But largely speaking, if you look at the typical daily customer experiences you have on the internet with whatever businesses you interact with, almost all of that is now mobile first. So the mobile transformation has saturated.

I think there are a few reasons to expect this could be much faster for AI. One, I think, is the level of investment. If I think back to the mobile transformation, yeah, Apple invested a lot of money. Google invested a lot of money. Microsoft. There are companies that certainly facilitated that transformation. But I was just looking at the news the other day, and I saw that Ernst & Young invested 1.4 billion over the last 18 months in developing their AI products. That did not happen with the mobile transformation, certainly not this quickly, because it's the last 18 months. Most companies were not even thinking of generative AI 18 months ago. So I think it just paints a picture of, OK, these guys are running quickly now.

Not everybody has to take that approach of, hey, let's go all in on generative AI. But I think it's also an area where it pays not to sleep or wait. You want to start taking steps, building that maturity. As Florian says, there is an element of maturity with all technologies. So I think getting into that space makes sense.

But I think we're looking more at a three-to-five-year transformation as it relates to the high-level internet, what you're going to see. So basically, hold on to your hats.

Mona: Great. Then we have some more questions coming in. Next up by Kimmo.

“Do you already have some case examples to share?”

Aki: Yeah, so I think that's something you'll probably see shared over the next few months. We're working on a number of projects already. But as these things happen sequentially, right now, if you look online, OpenAI of course has some great case studies for different industries, from finance to healthcare, et cetera. So I think that's a good place to start if you want to see some examples, because, of course, those companies were probably given preferential beta access, and so they were getting a jump start on that. But I think probably by the end of the year, you're going to start seeing case studies from us, but also very widely from all manner of companies. So I think, yeah, just watch the news, I guess.

Mona: Sounds good. Some more from Tommi. Can you talk a bit about the roles involved?

Sorry.

“What kind of traditional design roles will you find working on projects like this?”

Yeah, Florian, do you want to jump in on this one?

Florian: Yeah, sure. So as I said, we believe that a lot of the tools and approaches that we use for digital product development today, or have been using pre-AI, are very much applicable here. We're going to, of course, have to upskill and train people currently working in digital product development. But I think a lot of the things, user experience design, customer experience design, strategic design, content design in particular, and then also full stack development, will be applicable here with a bit, or in some cases a bit more, training, which is something we're focusing heavily on now. I believe that the Usable AI approach is not a jump. It's linear, consistent, an evolution, not a revolution. So I think these roles are very much applicable to what we're going to be doing next.

Mona: Sounds good. Some more questions now from Thomas.

“Could you elaborate a bit more about in what situation would it be best to choose an exploration sprint and when”, sorry, my voice seems to be failing me today. “And when validation sprint?”, sorry.

Aki: Yeah, so I can take a first stab. When I think back to the mobile transformation, I was doing a lot of this kind of work as a consultant at that time. And of course, all of this goes back to human-centered design, the double diamond, all these familiar constructs, in a sense, even from before we had mobile. But when I think of the projects that we were actually doing, I think it's one or the other. If you have a business problem in mind already, or you're already exploring, maybe you've decided that in this business process or in this problem it makes sense for us to have a look and start here. If you have something like that that's roughly scoped out, then I think it makes sense to go into validation, because that's the whole idea. You're trying to validate whether we can solve this problem or somehow make this process better using these generative AI tools. And the end result is something tangible, like a prototype.

But in many cases, it's a different situation where the board or management team has tasked us with, hey, we need to make some movement here. We need to start walking so that we don't get left behind in this space. We need to start making some first hires, et cetera. And in this kind of scenario, I think it makes sense to start with something more like the exploration sprint. Back in the mobile days, it would be the same thing. You look at the business through that lens, you do stakeholder interviews, customer interviews, and basically try to discover the best use cases where these technologies can help your business. And again, both of these are just tools by which you're increasing that maturity, because at the end of the day, this kind of consultative strategy work is not how you create business impact. At the end of the day, you're going to have to deploy these across the business and scale. But that's something that comes with time. Right now, certainly, our focus is on this kind of work. And I think it will be for maybe the next one to two years.

And then I think we start moving broadly in a place of maturity globally with businesses where people are more confident, both legally and technologically, to actually deploy these at scale and sort of like public facing like internet use cases and not just like generic ChatGPT. But I think, you know, there's a while to go and it's good to start in this kind of exploration and validation first.

Mona: Great, thank you. Another question. This is an interesting one.

“We also have to take into account that AI may be wrong. How to exclude errors? How to deal with it? Any error factor in the result?”

Aki: Yeah, so this kind of broad problem has been there with machine learning. We used to talk about bias a lot. So there's, you know, great news articles about things like racist algorithms, etc. So it's a known problem. I think the one new thing about generative AI is this hallucination problem, which is because it is, as the name implies, it's generative. It has, let's say, a broader ability to do sort of wild out of the box things. This is both a feature and a bug at the same time. If you didn't have this generative ability, you wouldn't be able to do all these amazing, you know, capabilities and skills that we see online right now. So it's a matter of controlling that. There are technical, let's say, levers, like the so-called temperature parameter in these models, which means like you can sort of make it very creative, which also means, you know, more likely to hallucinate or like very boring and professional, which means it's probably less capable to do like creative tasks.
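The effect of the temperature lever can be shown with a few lines of arithmetic. In the usual formulation, the model's raw scores for candidate next tokens are divided by the temperature before being turned into probabilities with a softmax. The sketch below is a simplification: the scores are made up, and it rejects a temperature of exactly zero, which real systems typically handle as a special case.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
cautious = softmax_with_temperature(logits, 0.2)  # low temperature
creative = softmax_with_temperature(logits, 2.0)  # high temperature

if __name__ == "__main__":
    print("low temperature: ", [round(p, 3) for p in cautious])
    print("high temperature:", [round(p, 3) for p in creative])
```

At the low setting nearly all of the probability sits on the top-scoring token, which is the "boring and professional" end. At the high setting the probabilities flatten out, so less likely tokens get picked more often.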

But then at the same time, I think this whole Usable AI process is meant to provide, like I was saying before, the structure for the conversation, as Florian was explaining, from the persona, the skills, the role definitions, all the way down to the intent and goals that we want to fulfill in these conversational flows. That's the point, right? You want to know what kind of customer intent you want to serve. It's not like, oh, we can discuss anything, berry picking and the upcoming presidential primaries in the US. No, we're trying to fulfill some kind of insurance process here. So you're predefining that, and anything outside of that scope, you're basically going to cut off. And this is something that you can instruct the models to do pretty well. They can redirect you to talk to a human advisor, or they can redirect you to customer service, like a website, if you go outside of scope.

So this is really where the prompt design and prompt engineering all come together to ensure, of course alongside testing, that you don't have this risk. But this is also why I think that initially we're going to see more use cases that are not public, consumer-facing processes. I think it's better and safer to start with things like internal, employee-facing solutions, productivity use cases, because again, the worst-case scenario is not immediately going to be on the front page of Reddit or Twitter or, worst case, the actual news.
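The scoping idea described above, serving a predefined set of intents and redirecting everything else, can be sketched in a few lines of Python. Everything here is hypothetical: the intent names, the redirect wording, and the assumption that some earlier step has already labeled the customer's message with an intent. In practice the instruction text would be part of the prompt, and the application-side check is just an extra safety net.

```python
# Hypothetical in-scope intents for an insurance assistant.
IN_SCOPE = {"file_claim", "check_coverage", "request_specialist_referral"}

REDIRECT = (
    "I can't help with that here. "
    "Please contact a human advisor or visit our customer service page."
)


def scope_instructions(intents):
    """Build the scope portion of a system prompt from the allowed intents."""
    listed = ", ".join(sorted(intents))
    return (
        f"Only help with these topics: {listed}. "
        "For anything else, politely decline and point the customer "
        "to a human advisor or the customer service website."
    )


def route(intent):
    """Application-side check: pass in-scope intents on, redirect the rest."""
    if intent in IN_SCOPE:
        return f"handle:{intent}"
    return REDIRECT


if __name__ == "__main__":
    print(scope_instructions(IN_SCOPE))
    print(route("file_claim"))
    print(route("berry_picking"))
```

A question about berry picking never reaches the insurance flow. It gets the redirect message instead.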

Mona: Great. All right. I would say let's go with one or two more. Like I said before, time is of the essence, but right now, “How to express brand value via AI assistant?”

Aki: Yeah, this is a pretty interesting one that we've been thinking about the past few months. And actually, the first thing we did when we started this project was to define a set of principles. So maybe, Florian, you want to talk about kind of how we can use tone of voice and brand as a sort of language tool?

Florian: Yeah, I mean, this, of course, very much depends on the experience you're designing. If you are designing a more traditional user experience that maybe uses components that we're using today across the channels that we're building for, then the tools that you have at your disposal today will apply. What becomes a lot more important now, I think, is things like, as you said, the tone of voice section in your brand guide that you have been ignoring for way too long and now should really dig out if you haven't done it yet. I think a lot of the great companies, of course, are not ignoring them. These are the kind of things that you need to bring into the conversation, if there is an actual conversation happening in your experience, to make sure that your assistant or your expert actually communicates in the way you intend them to communicate. And in that sense, I think it's good to think of them as another employee, another extension of your company that needs to translate what's important to you, what you focus on, and then, of course, also step away from things that you feel are outside of your brand value definition.

So luckily, a lot of the tools that we have defined for our brands will apply, but it will require some work to actually implement them.

Mona: Super. And as I'm seeing now, we are pretty much out of time. But if you do have time to stay online with us for a few more minutes, we can then take this one more question before leaving you guys today.

So, “How does DK&A plan to leverage AI in their design methodologies and processes?”

Aki: Yeah, Florian, this is a classic kind of dog food question. So how is the dog food tasting?

Florian: How will we use Usable AI in our own processes?

Aki: Yeah, like, I guess, whether it's tools, et cetera.

Florian: Right. Yeah. We have gone through this exercise and we will be going through this exercise. We have a tool called the AI workshop, the Usable AI workshop, which is designed to be used with our teams internally. And we will start to roll it out across our teams. We have already done that in some parts. I think we will try to identify just as our customers will, these areas in which we can still do more in addition to what we're doing today. And we will use our very own tools for this that we have designed here, because that's what we have done before.

We are applying the tools of design thinking to our own organization, and we are thinking about our organization in the same terms. So we will be applying usable AI just as we are doing it for our customers. We will be applying it to DK&A as well.

Mona: Great. Thank you, Florian. All right. I think it's pretty much time for us to wrap up today. We will be sending the recording to everyone. Thank you so much for participating actively. The discussion was great. Thank you to Aki. Thank you to Florian. And definitely stay on the lookout for more blog posts and social media posts from us. Like Aki said earlier, we are in the process of developing some POCs and projects with our customers. So you will definitely be hearing more from us quite soon.

Thank you once again. It was a pleasure to host you today. And I want to leave you with these words: have a very nice rest of the week.